AI is on the rise.

As a result, you’re probably looking to harness AI’s potential for your business.

But with great power comes great responsibility, and leveraging AI is no exception.

In the fast-paced tech world, we have to recognize how the use of AI can be either advantageous or damaging not just for your business, but for the world at large.

What we do with AI has real consequences. 🌩️

That’s why we have to talk about AI safety and responsibility. 🦺

After all, AI is taking over, whether or not we’re ready.

And believe me, it’s better to be ready.

75.7% of marketers are now using AI tools in their work. As mass adoption ensues, we have to understand what we’re dealing with.

This blog post + video will dive into the thoughts of top entrepreneurs on AI ethics, explore strategies for integrating the human touch alongside artificial intelligence, and help you choose the right tools for safe and accurate content generation.

Ready to navigate the complex landscape of AI safety and responsibility?

Table of Contents: AI Safety and Responsibility

- The Evolution of AI and Its Potential Threat

- The Need for AI Governance, Regulation, and Oversight

- Top Entrepreneurs’ Views on Artificial Intelligence

- Supercharging Your Success with AI Safety and Responsibility in Mind

- Conclusion

The Evolution of AI and Its Potential Threat

Artificial intelligence has come a long way since its inception. It has blown past expectations for what it could do or what was possible.

For instance, as recently as four years ago, experts were certain that AI would have great rewriting capabilities. They did not anticipate its generative skills: the ability to come up with new material on its own.

This rapid evolution raises concerns about the potential threat AI poses to humanity if it’s not regulated properly.

Geoffrey Hinton, the “Godfather of Deep Learning,” predicts that AI will soon exceed human abilities in many areas and emphasizes the need for responsible development and use to prevent unintended results.

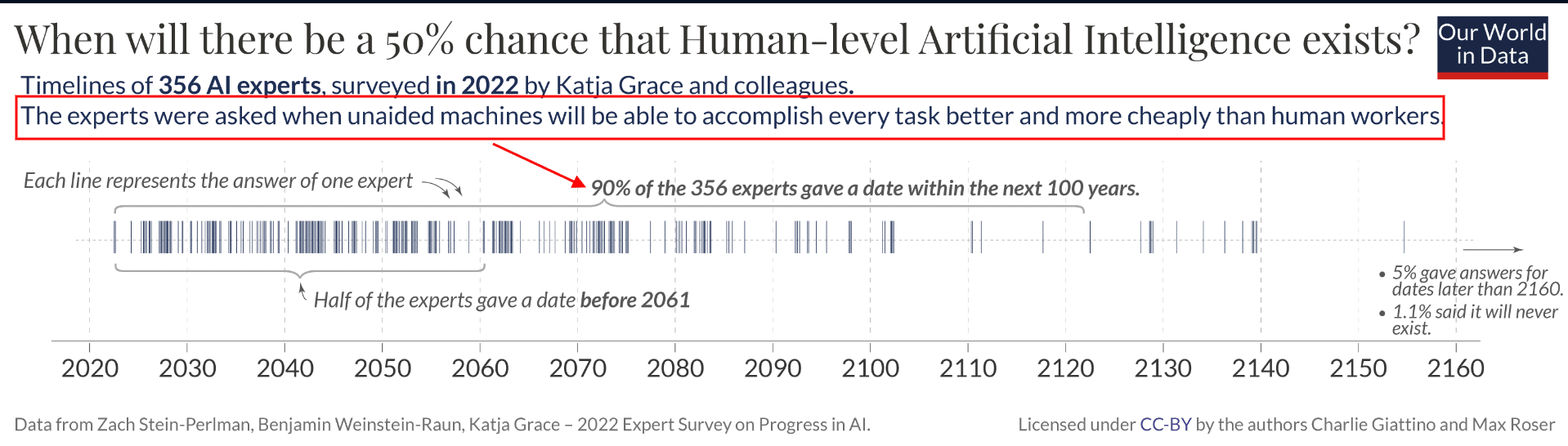

He’s not alone. According to an Our World in Data survey, 90% of experts believe human-level AI is possible in the next 100 years.

One of the main challenges business leaders face in light of human-level AI? Responsible usage, including AI regulation responsibility: putting standards for AI in place, and then following those standards ethically.

Advancements in AI: From 40 Years Ago to Present-Day

The progress made in artificial intelligence over recent decades is astounding – just compare today’s advanced machine learning algorithms with rudimentary expert systems from the 1980s.

We’ve moved from simplistic rule-based structures to highly advanced deep learning algorithms that can process immense amounts of information and make decisions independently.

Today, companies increasingly embed artificial intelligence into their everyday practices, workflows, processes, and communications. AI is everywhere, and it’s only going to spread further and deeper as the tech improves.

A Brief History Lesson: Expert Systems vs. Machine Learning Models

- Expert systems: These early attempts at creating intelligent machines relied on hard-coded rules programmed by humans.

- Machine learning models: Modern approaches involve training neural networks using vast amounts of data, allowing them to learn patterns and make decisions autonomously.

This has resulted in major advances in areas such as natural language processing, computer vision, and recommendation systems.
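To make the contrast concrete, here is a minimal Python sketch. It is an illustration only, not how any real product works: the spam-filter scenario, the word list, the training examples, and every function name are assumptions I made up for this post. The "learned" half uses simple word tallies rather than a neural network, but the idea is the same: the rule comes from labeled examples instead of from a person typing it in.

```python
from collections import Counter

# Expert-system style: a human hard-codes the rule (hypothetical word list).
def rule_based_is_spam(text):
    banned = {"free", "winner"}
    return any(word in banned for word in text.lower().split())

# Machine-learning style: word scores are derived from labeled examples.
def train_word_scores(examples):
    scores = Counter()
    for text, is_spam in examples:
        # Each distinct word votes +1 when seen in spam, -1 otherwise.
        for word in set(text.lower().split()):
            scores[word] += 1 if is_spam else -1
    return scores

def learned_is_spam(scores, text):
    # Unseen words score 0, so they do not sway the result.
    return sum(scores[word] for word in text.lower().split()) > 0

# Made-up training data, purely for illustration.
EXAMPLES = [
    ("claim your prize now", True),
    ("prize inside click now", True),
    ("meeting notes attached", False),
    ("lunch meeting tomorrow", False),
]
scores = train_word_scores(EXAMPLES)

print(rule_based_is_spam("claim your prize"))       # False: no hand-written rule covers it
print(learned_is_spam(scores, "claim your prize"))  # True: the pattern was learned from data
```

The hand-written rule misses anything its author did not think of, while the learned version picks up "prize" on its own. That flexibility is also why the quality of the training data matters so much.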

As AI continues its rapid advancement, we have to prioritize the responsible development and implementation of these technologies.

By doing so, we can harness the power of artificial intelligence for good while mitigating potential risks associated with unchecked growth.

The Need for AI Governance, Regulation, and Oversight

Overwhelmingly, AI regulation responsibility has fallen to companies, organizations, and business leaders. That’s because the technology is largely being developed by these entities.

Elon Musk, the CEO of SpaceX, Tesla, and Twitter, says AI needs regulation and oversight to avoid becoming a bigger threat than nuclear weapons.

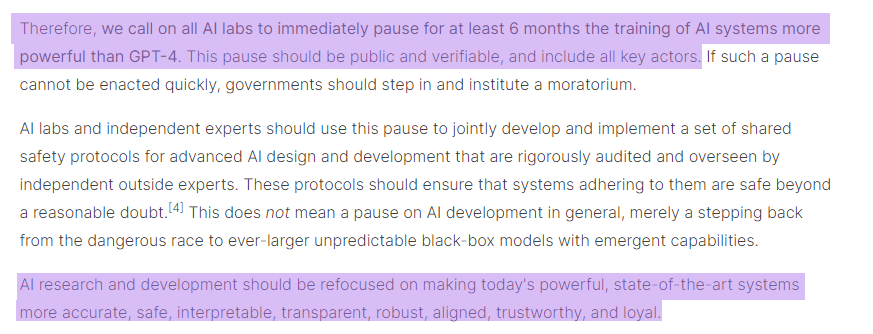

This sentiment is echoed in the open letter he signed alongside many other experts, business leaders, and giants from the tech world. It called on all AI labs to pause, for at least six months, the training of AI systems more powerful than GPT-4.

Screenshot from Future of Life Institute

Musk and other signers, including Steve Wozniak (co-founder of Apple), Yoshua Bengio (a Turing Award winner and scientific director at Mila), Stuart Russell (director of the Center for Intelligent Systems), and even the co-founders of Pinterest and Getty Images, put their names behind the following basic principles:

- Regulatory bodies should monitor AI development and set safety standards to mitigate potential dangers.

- Formal policies and parameters around AI should ensure ethical use and minimize the risks associated with unchecked advancements in technology.

The open letter was an initiative of the Future of Life Institute, which develops guidelines to promote responsible research practices within the field of artificial intelligence.

What’s an example of the kind of AI regulation responsibility these experts are calling for?

One is the Algorithmic Accountability Act, a bill introduced in the U.S. Senate that would require companies like home loan providers, banks, and job recruitment services to be more transparent about the AI they use to make decisions on finances, employment, and housing for their customers.

Generally, proactive measures toward AI safety and responsibility will help shape a future where artificial intelligence serves as an invaluable tool for growth rather than a risk to humanity.

Top Entrepreneurs’ Views on Artificial Intelligence & AI Regulation Responsibility

Successful entrepreneurs like Alex Hormozi (of Acquisition.com) and Tom Bilyeu (the co-founder of Quest Nutrition and Impact Theory) have expressed concerns about the potential risks of AI, but also believe in its responsible use for business growth.

AI can help businesses scale and improve efficiency, but we can’t rely too heavily on automation without considering the human element involved in decision-making processes.

It’s crucial to view AI as an enhancement rather than a replacement for human capabilities. AI won’t replace you — not yet — but it does have the power to supercharge you. ⚡

That’s why we need to integrate these technologies into our workflows thoughtfully and purposefully. (Enter AI regulation responsibility.)

Don’t forget: AI gives you a HUGE competitive advantage if you use it smartly and responsibly.

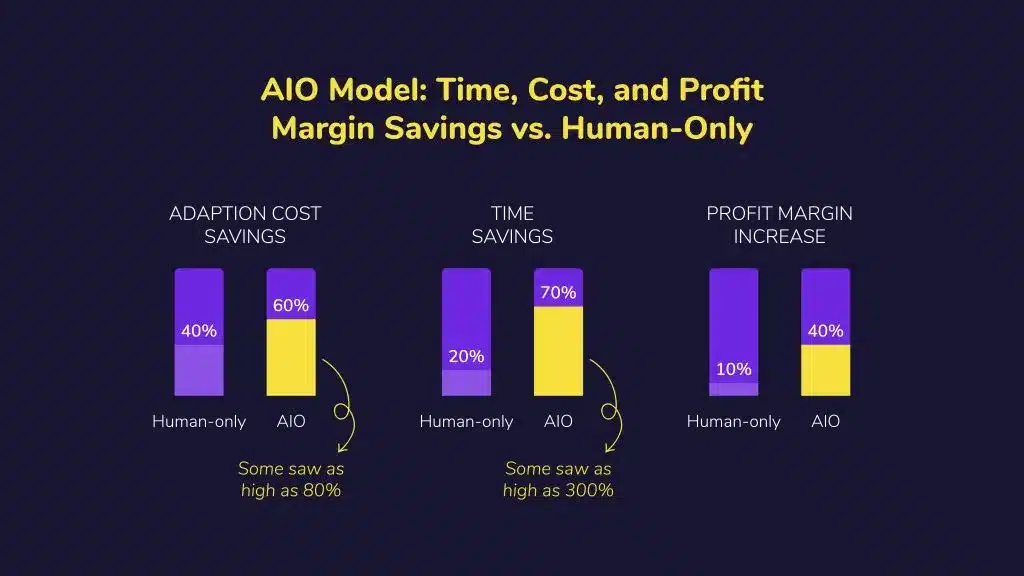

Businesses implementing AI the right way enjoy increased efficiency and reduced costs. In a study we did at Content at Scale on the cost and time savings of the AIO model as compared to human-only content production, our users saw an average of 60% cost savings and 70% time savings.

What is AIO? What’s an AIO writer? Read my guide on AIO.

By embracing responsible AI use, we can harness its power to drive innovation both in our businesses and in the world.

Supercharging Your Success with AI Safety and Responsibility in Mind

AI is a helper, not a menace. If you work in content marketing or use content to grow your business, AI gives you an opening to improve your output and skyrocket your growth.

The key is to use AI tools responsibly and maintain control over your content generation.

- Strategy #1: Integrate the human touch alongside artificial intelligence.

  - Retain your unique voice while benefiting from the power of AI technology. I developed a content creation framework for this: it’s called C.R.A.F.T.

- Strategy #2: Choose responsible AI tools and solutions that prioritize safety, accuracy, and user privacy.

  - An example of such a tool is Content at Scale, which is designed for high-quality content creation without compromising your data security or the factual integrity of your content.

- Strategy #3: Stay informed about AI regulation, governance, and ethics.

  - Follow international organizations and business leaders advocating for accountability for companies developing AI systems.

Overall, it’s important to be strategic and choose tools that support your bottom line AND are built with AI safety and responsibility in mind. The right tools are built by the right people, who care about the effect AI has on the world and how it’s used.

Want to learn how AI can fit seamlessly into your content production pipeline? Get my Content Process Blueprint and learn how.

Maintaining Control Over AI Content Generation

After you select a tool created by responsible people, you need to build a plan for controlling and checking the content your tool produces. With these checks in place, you’ll help thwart issues like the rampant spread of misinformation, and you’ll keep the trust you’ve built with your audience intact. 🤝

- Create clear guidelines for how you want the generated content to align with your brand’s tone, style, and messaging goals.

- Regularly review and edit the AI-generated content to maintain a high level of quality, accuracy, and relevance.

- Keep an eye on AI advancements and modify your approaches in response.

Shaping Our Future Through Conscious Interaction with AI Technology

By now, we know that to get reliable results out of any AI system, we have to feed it accurate information.

AI systems are trained on the data we give them! They learn from interactions with humans as well as human-created content and written works.

ChatGPT is trained on data from the internet, and user prompts and conversations may also be used to improve it (with human supervision and filtering, so it doesn’t just absorb everything it’s fed automatically).

Accuracy, consistency, completeness, and timeliness are all essential components to consider when assessing the quality of data for machine learning algorithms and deep learning models.
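If your team is comfortable with a little Python, some of these checks can be automated before data ever reaches an AI tool. The sketch below is a rough illustration under my own assumptions: the record fields (`id`, `text`, `updated`), the one-year freshness cutoff, and the function name are all hypothetical. Note that it covers completeness, timeliness, and consistency only. Accuracy still needs a human fact-checker.

```python
from datetime import date

# Hypothetical schema: every record needs these three fields.
REQUIRED_FIELDS = ("id", "text", "updated")

def quality_issues(records, today, max_age_days=365):
    """Return (record id, problem) pairs for records that fail a basic check."""
    issues = []
    first_text_by_id = {}
    for rec in records:
        rid = rec.get("id")
        # Completeness: flag any required field that is absent or empty.
        missing = [f for f in REQUIRED_FIELDS if rec.get(f) in (None, "")]
        if missing:
            issues.append((rid, "incomplete: missing " + ", ".join(missing)))
            continue
        # Timeliness: flag records older than the cutoff (an assumed one year).
        if (today - rec["updated"]).days > max_age_days:
            issues.append((rid, "stale"))
        # Consistency: the same id should not carry conflicting text.
        if rid in first_text_by_id and first_text_by_id[rid] != rec["text"]:
            issues.append((rid, "inconsistent"))
        first_text_by_id.setdefault(rid, rec["text"])
    return issues

# Made-up sample data, purely for illustration.
sample = [
    {"id": 1, "text": "Our return window is 30 days.", "updated": date(2024, 1, 1)},
    {"id": 1, "text": "Our return window is 14 days.", "updated": date(2024, 1, 2)},
    {"id": 2, "text": "", "updated": date(2024, 1, 1)},
    {"id": 3, "text": "Holiday hours for 2020.", "updated": date(2020, 1, 1)},
]
for record_id, problem in quality_issues(sample, today=date(2024, 6, 1)):
    print(record_id, problem)
```

Anything the check flags goes back to a person for review rather than into the tool.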

With all that in mind, think carefully about the data you feed an AI tool or system. Consider that your data may be used for training, and it may be integrated into the general “knowledge” of that system.

In a larger sense, if you use AI in a business or team setting, it’s a good idea to educate your team on AI ethics and best practices and answer the larger question: How do we use AI responsibly?

- What tools can we use that are committed to safe and responsible use?

- What data can we feed our AI tools, and what’s off the table?

- What are our AI policies? What can we use it for, and what should we NOT use it for?

- How transparent do we need to be with our audience and customers about our AI use?

By taking these steps towards conscious interaction with technology, we contribute to a better world powered by advanced yet responsible AI solutions. These are also cornerstones for AI regulation responsibility.
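For teams that like to write things down in a form software can check, the answers to those questions can live in a small policy file. Here is one possible sketch in Python. Every entry in it (the approved tool list, the allowed uses, the banned data types) is a placeholder assumption for illustration, not a recommendation, and `check_request` is a hypothetical helper name.

```python
# Hypothetical team AI policy; every entry is an illustrative placeholder.
AI_POLICY = {
    "approved_tools": {"Content at Scale"},
    "allowed_uses": {"blog drafts", "outlines", "social captions"},
    "banned_data": {"customer records", "passwords", "unreleased financials"},
    "disclose_ai_use": True,  # whether the team tells its audience about AI use
}

def check_request(tool, use, data_types, policy=AI_POLICY):
    """Return a list of policy problems; an empty list means the request passes."""
    problems = []
    if tool not in policy["approved_tools"]:
        problems.append(f"tool not approved: {tool}")
    if use not in policy["allowed_uses"]:
        problems.append(f"use not allowed: {use}")
    # Sorted so the report order is stable from run to run.
    for item in sorted(set(data_types) & policy["banned_data"]):
        problems.append(f"data off the table: {item}")
    return problems

print(check_request("Content at Scale", "blog drafts", ["public web pages"]))
print(check_request("Unknown Bot", "legal contracts", ["passwords", "customer records"]))
```

The first request passes with an empty list, while the second comes back with four problems to fix. The point is less the code than the habit: decide the rules once, write them down, and check against them every time.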

Choosing the Right Tools for Safe and Accurate Content Generation

Selecting the right AI-powered tools matters for responsible content generation.

One such tool is Content at Scale – an AI writer designed to create high-quality SEO content without compromising data security or factual integrity.

- Safety: This tool prioritizes user privacy and data protection while generating top-notch content. It only accesses publicly available data to do its job: it crawls the web and looks at the top Google results to help it generate SEO blog posts.

- Accuracy: One of my biggest concerns with AI content tools was factual accuracy. AI is known to fib and make up facts. Content at Scale was one of the few AI companies that actively addressed this concern: the tool looks for places to add links and citations to content!

- User-friendly Interface: Its intuitive design allows even non-technical users to navigate with ease and produce quality output efficiently.

The right choice of artificial intelligence tools can significantly impact your experience with this technology, in terms of both productivity gains and ethical considerations.

Choosing a responsible tool is the first step toward AI safety and responsibility.

Ready to try Content at Scale? Sign up with my link and get 20% extra credits to use toward creating content.

AI Safety and Responsibility Matter Now More Than Ever

The evolution of artificial intelligence is advancing more rapidly than anyone ever dreamed.

That’s why it’s crucial for businesses and organizations to take responsibility. (I’m talking about both general safety and contributing to AI regulation responsibility.)

AI is no joke. In less than 100 years, it could reach a human level of cognition. 🤯

That’s scary, but it just means we have to start with safety NOW.

Humans will determine the destiny of artificial intelligence — not the other way around.

Feeding accurate information into AI systems and ensuring responsible use across various industries is crucial for shaping a bright future.

Want to learn more about how to integrate AI into your business the right way?

I updated my mentorship program, The Content Transformation System, to include AI lessons for this reason.

The need is there to understand this technology and leverage it responsibly. And you can do that in your content marketing and business activities to grow beyond what you ever dreamed. 💭

Let’s grow your brand with smart strategies, skills, and systems, with a touch of AI.