Let’s talk about AI content labeling, sometimes known as AI generated content policy. 🏷️

In essence, this is all about marking your content as “AI-generated” so your users understand that artificial intelligence helped you produce it.

Your label can be anything from a byline to a tag or watermark, as long as it indicates that AI produced the content.

Since 85.1% of marketers report using AI for article writing, it makes sense that some businesses and organizations are aiming for transparency and using AI content labeling as part of their publishing strategy.

They’re openly telling you, “No, this content was not 100% written by a human.”

However — is AI content labeling even necessary?

….

Not according to Google, and not for written content.

Surprised? (I was, too. 😆)

That’s why, in this post, we’ll take an in-depth look at Google’s approach to labeling AI content (hint: E-E-A-T and UX play a role) and explore the stance of other major players like the European Union.

Table of Contents:

- Do We Need an AI Generated Content Policy? Guidelines from Google

- Human-Created Content Has the Upper Hand Over AI… for Now

- Conclusion

Do We Need an AI Generated Content Policy? Guidelines from Google

No, you don’t have to label your written AI content. You don’t need to create an AI generated content policy.

Can Google detect AI-generated content, or tell when content was automatically generated?

Google can, but it doesn’t require blog posts to be labeled as AI content. Google’s Webmaster Guidelines (now called Google Search Essentials) cover this.

It has to do with how Google treats content generated by artificial intelligence.

It turns out the tech giant doesn’t label AI-generated content differently from human-created material.

This is new information we learned from an event — Google Search Central Live Tokyo 2023 — where top Googlers like Gary Illyes answered questions about the search giant’s stance, recommendations, and more about AI content.

(This might seem surprising considering both Google and the European Union recommend labeling AI-generated images with IPTC photo metadata.

However, images are a different beast when it comes to content. Think deepfakes and other AI-produced images that have the power to fool people completely — Balenciaga Pope, anyone?)
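
For the technically curious, here’s roughly what that image label looks like under the hood. This is a minimal Python sketch, not Google’s or the EU’s official tooling: it builds a bare-bones XMP snippet that sets the IPTC Digital Source Type property to `trainedAlgorithmicMedia` (the IPTC value for media created by a generative model), then checks a snippet for that value. The function names `build_ai_label_xmp` and `is_labeled_ai` are made up for illustration, and actually embedding the snippet into an image file is left to whatever metadata tool you already use.

```python
import xml.etree.ElementTree as ET

# Namespaces used by XMP/RDF and the IPTC Extension schema.
RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
IPTC_EXT_NS = "http://iptc.org/std/Iptc4xmpExt/2008-02-29/"

# IPTC Digital Source Type value for media created by a generative AI model.
AI_GENERATED = (
    "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)

# Use readable prefixes instead of auto-generated ones (ns0, ns1, ...).
ET.register_namespace("rdf", RDF_NS)
ET.register_namespace("Iptc4xmpExt", IPTC_EXT_NS)


def build_ai_label_xmp() -> str:
    """Return a minimal RDF/XMP snippet marking an image as AI-generated.

    Illustrative only: a real XMP packet carries more wrapping and fields.
    """
    rdf = ET.Element(f"{{{RDF_NS}}}RDF")
    description = ET.SubElement(rdf, f"{{{RDF_NS}}}Description")
    description.set(f"{{{RDF_NS}}}about", "")
    source_type = ET.SubElement(
        description, f"{{{IPTC_EXT_NS}}}DigitalSourceType"
    )
    source_type.text = AI_GENERATED
    return ET.tostring(rdf, encoding="unicode")


def is_labeled_ai(xmp: str) -> bool:
    """Check whether an XMP snippet declares the AI-generated source type."""
    root = ET.fromstring(xmp)
    tag = f"{{{IPTC_EXT_NS}}}DigitalSourceType"
    return any(element.text == AI_GENERATED for element in root.iter(tag))


if __name__ == "__main__":
    snippet = build_ai_label_xmp()
    print(snippet)
    print("Labeled as AI-generated:", is_labeled_ai(snippet))
```

The takeaway for non-coders: the image “label” is just one small metadata field with a standard value, which is why it’s far easier to standardize for images than for written content.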

The key point here is that, for written content, Google prioritizes quality over the originator of that content. Quality trumps everything else in Google’s eyes.

No matter who or what generates your text – be it a seasoned copywriter or an advanced algorithm – if it provides value and enhances user experience, you’re on safe ground.

So why isn’t there a requirement for publishers to label their text as created by artificial intelligence?

Because, in essence, such labeling wouldn’t necessarily improve the user experience – which remains at the heart of all things SEO-related.

The European Union’s Stance on Labeling Automatically Generated Content, or Creating an AI Generated Content Policy

On top of Google’s recent position, the EU is advocating for social media outlets to label material generated by AI on a voluntary basis.

Separately, the EU’s proposed regulations would require creators to disclose if their work was created or influenced by machine learning algorithms, increasing transparency without stamping “Made by a Robot” on everything in giant screaming letters. (Because, let’s be honest, a LOT of content these days is AI-generated, probably more than we even realize!)

This labeling could have a substantial effect on our perception of, and engagement with, online content. Or not.

Adopting these policies could promote accountability in our increasingly digitized world, not to mention help fight the growing problem of disinformation online.

It’s about being open, responsible, and transparent with how you create and publish content.

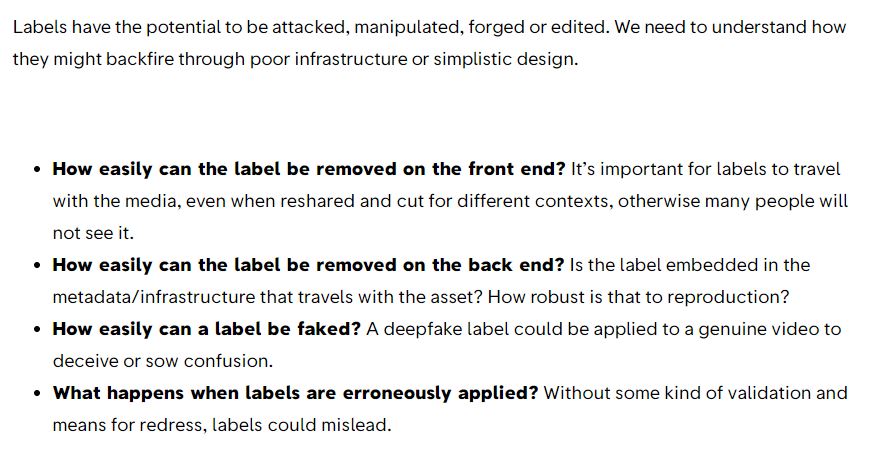

However, we should also keep in mind that this isn’t a black-and-white issue. Labeling ALL content could lead to a slippery slope of unforeseen problems, as this article by First Draft News outlines.

AI Content Doesn’t Need Labeling… It Needs the Human Touch (No Need for an AI Generated Content Policy)

When it comes to publishing content created by artificial intelligence, there’s one golden rule: Don’t just hit the publish button indiscriminately.

AI might be smart, but it still lacks the human touch – that’s why a human review is crucial before any AI-generated or translated content goes live.

The need for a human editor in the loop isn’t about playing gatekeeper – it’s about ensuring quality and maintaining trust with your audience.

Pssst… that’s exactly why I created the C.R.A.F.T. framework for editing your AIO content. ✏️

This step (human edits to your AI content) has become more important than ever, because…

Human-Created Content Has the Upper Hand Over AI… for Now (Without an AI Generated Content Policy)

When it comes to search engine rankings, human-written content tends to beat artificially generated text.

Why? Because Google’s algorithms are designed around human-created content.

Google’s algorithms try to understand the context and intent behind keywords and phrases, which means original, high-quality content written by humans tends to rank higher than machine-generated texts.

Using AI tools to generate website content may result in lower search engine rankings compared to naturally written material, according to Google’s Gary Illyes, who said at Google Search Central Live Tokyo 2023, “Our algorithms and signals are based on human content.”

Your AI content needs the human touch… but how should you work this into your content process? What tools should you use? How should you strategize?

Learn it all when you grab my Content Process Blueprint.

E-E-A-T Guidelines and AI-Generated Content: A Challenge

So, you’ve heard about E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) – Google’s gold standard for assessing search quality.

But how does this apply to AI-generated content, or creating an AI generated content policy?

Here’s the thing: Artificial intelligence doesn’t have life experiences or expertise in any topic, making it difficult to apply these principles to AI outputs.

In particular, Google’s Search Quality Rater Guidelines say that raters should look for evidence of the author’s experience and knowledge on the topic.

This is a problem for AI content.

- No matter how advanced an AI becomes, its inability to possess real-world experience might always limit its ability to meet certain quality thresholds set by guidelines like E-E-A-T.

- If you’re using AI tools for generating your website content, ensure there is human intervention before publishing – editing and proofreading can go a long way in ensuring higher standards of trustworthiness and authority.

Evolving Policies Around AI Content

Advanced machine learning systems are gaining traction in news media outlets, but purely algorithmic outputs often lack the trustworthiness associated with human-generated content.

Many organizations have hit the brakes on their AI endeavors, slowing down this trend considerably.

Policy-making circles are buzzing with discussions about AI usage across different sectors, focusing on developing comprehensive guidelines that strike a balance between leveraging technology and maintaining quality standards.

For instance, the European Union has proposed stringent rules for high-risk AI applications.

So, what do the evolving policies around AI usage in mainstream media production ask of publishers? In general, they ask you to:

- Acknowledge the potential benefits of automation but also recognize its limitations;

- Carefully consider ethical implications such as bias and misinformation;

- Maintain transparency by labeling AI-generated content where possible; and,

- Ensure human oversight remains integral to any automated process.

To sum up: We’re living through a momentous transition thanks to sophisticated machine-learning technologies, and the future promises exciting possibilities if we navigate it wisely.

AI Content Labeling Doesn’t Matter if You Aim to Help Your Readers

If we learned anything new today, it’s that Google doesn’t care if you label your AI content as long as your intent behind publishing it is to help your readers.

In other words, if you’re a “good faith actor” — if you’re publishing AI content with quality in mind and include your human touch at some point in the creation process — AI labeling isn’t necessary.

Instead, think about when AI labeling will help your user experience. When might your readers need to know that AI created the content, if ever?

Use common sense. Some examples:

- You created a post with AI and published it without edits. (Whether or not the post is good, you should disclose that it was 100% AI-produced somewhere on the page.)

- You’re sharing a deepfake (like the infamous Pope photo we mentioned above, or one of those astonishing Tom Cruise AI videos). You should reiterate that these aren’t real to avoid spreading disinformation.

Needless to say, navigating this new AI-filled world can be intimidating and confusing — especially if you create content for your business or for clients.

If you need education, advice, and mentorship on how to incorporate AI successfully into your content, you need my Content Transformation System.

This program helps you get clear on not just your business skills and goals, but also how to do content marketing that helps you grow.

It’s the full package for the new (or established!) business owner or entrepreneur: curriculum, community, monthly live calls, and coaching.

Ready to get started? Apply today.